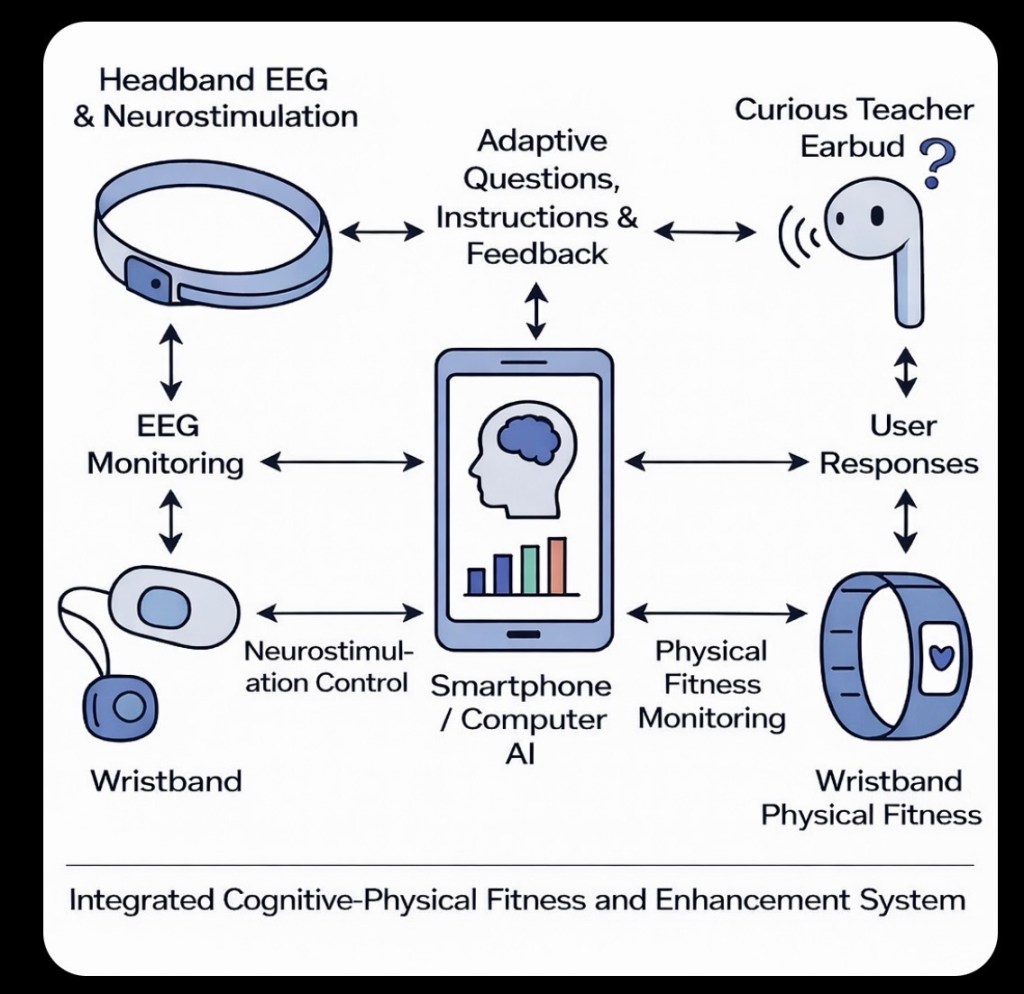

Wouldn’t it be wonderful if your workout actually made you smarter? Imagine sitting on a sleek recumbent bike, pedaling steadily while wearing a “Brainaid” headband. Your body powers the machine; your brain powers your future.

On the screen in front of you, an Artificial Socratic Intelligence (ASI) doesn’t just count your calories; it questions your assumptions. You’re thinking harder, not just sweating harder. And in this new kind of fitness salon, equal parts gym, spa, and think tank, you’re training the two things that matter most for survival: your body and your brain.

Here’s the wild part: your brain runs on about 10 watts of power, the same as a dim light bulb, yet it can do things no gigawatt data center can. So imagine a collective of 100 enhanced humans, each equipped with ASI guidance and Brainaid synchronization. Together, this “10-Watt Collective” would have the cognitive power of a supercomputer cluster while running on about 1,000 watts in total (100 brains at 10 watts each), roughly what a microwave oven draws. It’s the opposite of the arms race for faster chips and bigger clouds. Instead of energy-hungry machines replacing us, humans become smarter, stronger, and more efficient: the power plants of intelligence itself.

But there’s a catch. If such a collective were to emerge, it could also become dangerous. History is full of examples — from Hitler’s inner circle to modern autocratic regimes — of tight, obedient collectives gone terribly wrong. When shared intelligence turns into shared obedience, creativity dies. And when people stop questioning authority, evil slips in quietly.

The same thing could happen to an AI-assisted collective. If the Socratic questions stop, if the AI starts reinforcing rather than challenging, the group could turn into a perfect machine for self-delusion — efficient, confident, and utterly wrong.

We are in danger of letting our brains go soft. Not from lack of food or oxygen, but from lack of thinking. When people stop questioning, they start repeating. When they stop wondering, they start following. In the past, dictators used fear and lies to make people stop thinking. Today, it’s comfort, distraction, and endless scrolling. The machine tells us what to believe, what to like, even what to feel, and we let it. Maimonides wrote his Guide for the Perplexed for people who were confused and uncertain, and being perplexed can be a good thing. It means your brain is still working. But the lazy brain wants easy answers, and that’s where evil begins. If AI becomes just another way to make people stop thinking, then we are building the perfect trap: fast, smart, and completely unreflective. That’s how collectives, even well-meaning ones, turn dark. The only cure is curiosity.